Resilience in the Cloud - Making Things Better

If you've read my last two posts in this series, you might be starting to reflect on systems that you manage or are currently building and how resilient they really are. If you haven't read the other posts in my AWS Resilience Series, here's where you can find them:

- Availability vs. Recoverability discusses the difference between making a system highly available and being able to recover from a failure when one inevitably does happen.

- Fault Isolation Boundaries covers what these boundaries are, how AWS uses them to provide us resilient cloud services, and how we can leverage that architecture to improve our own systems.

This third and final post in the series discusses how to take the lessons from the previous two and turn them into actionable improvements in your workloads.

Cloud resilience is one of those topics where lots of people have their own idea of what "good" looks like, and their paths to get there all look a bit different. Along these paths, teams tend to make a number of mistakes and fall into common misconceptions, though usually for understandable reasons. Building resilient applications is complex, but measuring success in resilience is often harder still: it can require more investment and operational maturity than many applications otherwise need. Because of this, system resilience can be an elusive topic, fraught with frustration and false senses of security.

So how do you evaluate your applications as they stand today, and make a plan for where you want your architecture to be tomorrow? How do you ultimately know what "good" should look like for you?

I've spoken on this topic a few times in the past (at least once publicly), and historically I've answered this question with the following four steps:

- Start with backups

- Identify potential failure points

- Implement mitigations and redundancies

- Plan for disaster and test

If you do just one thing, it should be addressing your backup strategy. Today's technology landscape places a huge emphasis on data; data is the foundation of modern business, and it's crucial to ensure that it's protected. Data loss can happen in many different ways, but you can distill the problem by looking at every data storage resource in your application and asking yourself: "What would I do if this data disappeared tomorrow?" If the answer is anything besides "I could easily recreate it", you almost certainly need to back it up.
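To make this exercise concrete, here is a minimal Python sketch that walks an inventory of data stores and flags the ones that can't be easily recreated and aren't yet backed up. The `DataStore` class, its fields, and the store names are hypothetical; in practice the inventory would come from your own architecture review rather than a hard-coded list.

```python
from dataclasses import dataclass


@dataclass
class DataStore:
    """One data storage resource in the application (illustrative fields)."""

    name: str
    easily_recreated: bool  # "Could I easily recreate it if it disappeared tomorrow?"
    backed_up: bool         # Is there an existing backup covering this store?


def stores_needing_backup(inventory: list[DataStore]) -> list[str]:
    """Return names of stores that can't be easily recreated and have no backup."""
    return [
        store.name
        for store in inventory
        if not store.easily_recreated and not store.backed_up
    ]


if __name__ == "__main__":
    # Hypothetical inventory; replace with the data stores in your own application.
    inventory = [
        DataStore("orders-database", easily_recreated=False, backed_up=True),
        DataStore("user-uploads-bucket", easily_recreated=False, backed_up=False),
        DataStore("session-cache", easily_recreated=True, backed_up=False),
    ]
    print(stores_needing_backup(inventory))  # ['user-uploads-bucket']
```

The session cache is skipped because it can be rebuilt, which is exactly the distinction the question above is meant to draw out.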

AWS has services with some amazingly high data durability, but that durability serves the AWS side of the shared responsibility model, not ours as customers. A well-designed backup strategy should protect you against the full range of data loss scenarios: storage medium failure or corruption, inadvertent modification or deletion, and even ransomware attacks. Make sure you're considering all of these when designing a data protection strategy, and don't rely solely on vendor durability figures.
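One way to keep all three scenarios in view is to check a backup design against each of them explicitly. The Python sketch below is a simple checklist, not an AWS API: the `BackupPlan` fields and the control assumed to address each threat are illustrative assumptions, not a complete data protection model.

```python
from dataclasses import dataclass


@dataclass
class BackupPlan:
    """Hypothetical summary of a backup setup; the field names are illustrative only."""

    copy_on_separate_storage: bool  # a copy lives outside the primary storage medium
    point_in_time_restore: bool     # can roll back to before a bad write or deletion
    isolated_immutable_copy: bool   # a copy that compromised credentials can't alter or delete


def uncovered_threats(plan: BackupPlan) -> list[str]:
    """Return the data loss scenarios that this plan does not address."""
    # Assumed mapping of each threat to the control that primarily addresses it.
    checks = {
        "storage medium failure/corruption": plan.copy_on_separate_storage,
        "inadvertent modification or deletion": plan.point_in_time_restore,
        "ransomware": plan.isolated_immutable_copy,
    }
    return [threat for threat, covered in checks.items() if not covered]


if __name__ == "__main__":
    plan = BackupPlan(
        copy_on_separate_storage=True,
        point_in_time_restore=True,
        isolated_immutable_copy=False,
    )
    print(uncovered_threats(plan))  # ['ransomware']
```

An empty result only means each scenario has at least one control; it says nothing about whether a restore actually works.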

Moving beyond backups, the next step is to identify other potential failure points in your architecture. For a long time, I've followed a methodical approach similar to the one for backups: walk your infrastructure, identify how each component could fail, then mitigate that failure. It turns out that some of the brilliant minds at AWS wrote up similar guidance, with far more structure and rigor than I ever did, in the AWS Prescriptive Guidance document titled Resilience analysis framework. If you're working to improve system reliability at any scale, I highly recommend reading about this framework and understanding how it can best be applied in your own organization.

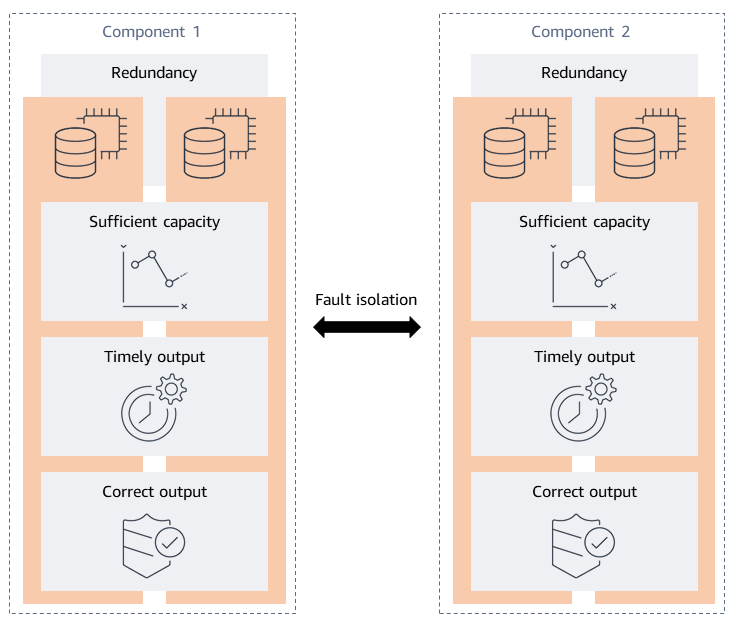

At a high-level, the Resilience analysis framework focuses on the following characteristics of highly available systems: redundancy, sufficient capacity, timely and correct output, and fault isolation. The expectation is that a system that exhibits these characteristics would be considered "resilient". For each of these characteristics, the framework prescribes failure categories which help connect the dots from your infrastructure itself and actually embodying these characteristics.

Relationships of the desired resilience properties reprinted from Resilience analysis framework - Overview of the framework

Relationships of the desired resilience properties reprinted from Resilience analysis framework - Overview of the framework

When it comes to applying the framework, the first step I always recommend to teams is to start with an up-to-date architecture context diagram that shows all of the pertinent components of your system. I believe the framework delivers the most value when it prompts conversations among teams rather than being a solo effort, and having a diagram that everyone can use as a frame of reference is key to keeping the dialogue focused and productive.

From there, the framework recommends using user stories to evaluate individual business processes, keeping the focus on value to the end user. For many applications I think this is a great approach, since ultimately your users care whether they can use your system to get useful work done; they're usually not concerned with the specifics of your API server. One of the underappreciated benefits of the framework is that it scales easily up and down to systems of all sizes and scopes. For larger (and particularly older) systems, where the current owners didn't necessarily build all of that functionality, focusing on user stories might be a challenge. In these cases, you can still get a ton of value out of evaluating at the component and interaction level instead.
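Whichever level you evaluate at, it helps to record the analysis in a consistent shape. Here is a minimal Python sketch of a worksheet generator that pairs every component and interaction from your architecture diagram with each failure category. The property-to-category mapping is a summary of the framework's failure categories and should be checked against the framework itself; the function names and example components are hypothetical.

```python
from itertools import product

# Desired resilience property -> the failure category that undermines it, as summarized
# from the Resilience analysis framework (see the framework for full definitions).
PROPERTY_TO_FAILURE_CATEGORY = {
    "redundancy": "single points of failure",
    "sufficient capacity": "excessive load",
    "timely output": "excessive latency",
    "correct output": "misconfiguration and bugs",
    "fault isolation": "shared fate",
}


def items_to_evaluate(
    components: list[str], interactions: list[tuple[str, str]]
) -> list[str]:
    """Components plus each interaction (an arrow on the architecture diagram)."""
    return list(components) + [f"{src} -> {dst}" for src, dst in interactions]


def build_worksheet(
    components: list[str], interactions: list[tuple[str, str]]
) -> list[dict[str, str]]:
    """One row per (item, failure category) pair, for the team to fill in together."""
    return [
        {
            "item": item,
            "property": prop,
            "failure_category": category,
            "failure_mode": "",  # how could this item fail in this way?
            "mitigation": "",    # what, if anything, will we do about it?
        }
        for item, (prop, category) in product(
            items_to_evaluate(components, interactions),
            PROPERTY_TO_FAILURE_CATEGORY.items(),
        )
    ]


if __name__ == "__main__":
    # Hypothetical components and interactions from an architecture context diagram.
    rows = build_worksheet(
        ["API service", "orders database"],
        [("API service", "orders database")],
    )
    print(len(rows))  # 15: three items x five failure categories
    # Prints: API service -> orders database | shared fate
    print(rows[-1]["item"], "|", rows[-1]["failure_category"])
```

Leaving `failure_mode` and `mitigation` blank is deliberate: the value comes from the team discussing each row, not from the script.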

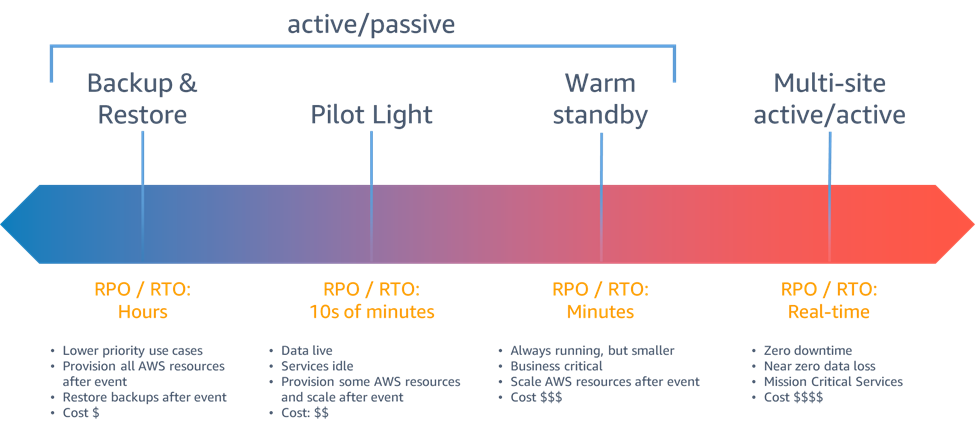

This blog post is not intended to get into all of the detail of the framework, you should really go read what the experts have to say here, but this does get us to another really important discussion point: how far do I go with my mitigations? The framework has an entire page titled "Understanding trade-offs and risks" which discusses this, but I have some of my own thoughts to add on top of that. In my discussions on this topic in the past, I've talked about the notion of resilience being a spectrum. As you move to higher availability (and thus, higher levels of resilience), the cost in both cloud spend and operations overhead increases dramatically. In-fact, AWS even includes a spectrum in their Disaster Recovery of Workloads on AWS: Recovery in the Cloud whitepaper.

Disaster recovery strategies figure 6 reprinted from Disaster Recovery of Workloads on AWS: Recovery in the Cloud

Disaster recovery strategies figure 6 reprinted from Disaster Recovery of Workloads on AWS: Recovery in the Cloud

Following from the point about cost, you should expect your costs to increase as you move to the right on this spectrum. This means you should consider the criticality of your system when deciding on the appropriate level of mitigation. Many companies use a tiering system to classify the criticality of their applications. If yours does, you'll probably be best served by mapping each tier to a specific resilience configuration.
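A tier-to-strategy mapping can be as simple as a lookup table. The Python sketch below uses the four strategies from the whitepaper's spectrum, ordered from least to most expensive; the tier numbers and which strategy each tier gets are hypothetical examples, not recommendations. It also includes a sanity check that no critical tier ends up with a cheaper strategy than a less critical one.

```python
# Disaster recovery strategies named in the AWS whitepaper, ordered from least to
# most expensive and complex.
DR_STRATEGIES = [
    "backup and restore",
    "pilot light",
    "warm standby",
    "multi-site active/active",
]

# Hypothetical tiering scheme (tier 1 = most business-critical). These assignments
# are an example only, not a recommendation for any particular system.
TIER_TO_STRATEGY = {
    1: "multi-site active/active",
    2: "warm standby",
    3: "pilot light",
    4: "backup and restore",
}


def strategy_for_tier(tier: int, mapping: dict[int, str] = TIER_TO_STRATEGY) -> str:
    """Look up the DR strategy assigned to a criticality tier."""
    if tier not in mapping:
        raise ValueError(f"unknown tier: {tier}")
    return mapping[tier]


def is_consistent(mapping: dict[int, str]) -> bool:
    """True if no tier gets a cheaper strategy than a less critical tier."""
    ranks = [DR_STRATEGIES.index(mapping[tier]) for tier in sorted(mapping)]
    # Sorted tiers go from most to least critical, so ranks should never increase.
    return all(earlier >= later for earlier, later in zip(ranks, ranks[1:]))


if __name__ == "__main__":
    print(strategy_for_tier(2))             # warm standby
    print(is_consistent(TIER_TO_STRATEGY))  # True
```

Two tiers may share a strategy, which is why the check only requires the cost ranking to be non-increasing rather than strictly decreasing.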

For most applications, a multi-region active/active configuration is going to be the most expensive and the most complex to implement. Especially for systems that aren't "cloud native", it's rare to find one that runs really well in a multi-site active/active configuration. However, newer AWS capabilities such as DynamoDB Global Tables and Aurora DSQL are making this easier for applications that can be designed for it from the start. Even if you aren't using these technologies, you can still make your systems more resilient by applying mitigations at a level of investment appropriate to the value a system provides to your customers or business.
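One reason active/active is hard is that two Regions can accept writes to the same record at nearly the same moment, and something has to decide which write survives. The Python sketch below shows last-writer-wins reconciliation, one common approach chosen here purely for illustration; it is not a description of how any specific AWS service resolves conflicts. The `Write` type and Region values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Write:
    region: str
    value: str
    timestamp: float  # when the write was accepted in its Region


def last_writer_wins(a: Write, b: Write) -> Write:
    """Keep the write with the later timestamp; break exact ties by Region name.

    The result is the same regardless of argument order, so every Region that
    applies this rule converges on the same value.
    """
    return max(a, b, key=lambda w: (w.timestamp, w.region))


if __name__ == "__main__":
    east = Write("us-east-1", "shipping address A", timestamp=100.0)
    west = Write("us-west-2", "shipping address B", timestamp=100.2)
    # Prints "shipping address B"; the us-east-1 update is discarded.
    print(last_writer_wins(east, west).value)
```

Both Regions agree on the outcome, but one customer update is silently lost. Deciding whether that's acceptable for each piece of data is part of what makes active/active designs expensive.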

The final point I'll make is that you should regularly test any recovery plans and mitigation strategies you implement. Just as you would test a piece of software to ensure it behaves the way you intend, you should ensure that your resilience strategy is actually working for you. The last thing you want in the midst of an outage is to discover that your recovery plan relies on some IAM permission or security group configuration you didn't account for, and now you're fighting two fires instead of one. Test as close to production as you possibly can, and test often. AWS Fault Injection Service can help with this step by making it easy to set up fault experiments and observe how both your infrastructure and your runbooks respond to a realistic failure in a controlled manner.
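Part of testing a recovery plan is checking whether recovery actually happens within your recovery time objective (RTO). The minimal Python sketch below times that: it calls a failure-injection function, then polls a health check until it passes or the RTO runs out. Both callables are hypothetical placeholders; in a real test the failure might come from an AWS Fault Injection Service experiment and the health check from your own monitoring, and neither integration is shown here.

```python
import time
from typing import Callable


def measure_recovery(
    inject_failure: Callable[[], None],
    is_healthy: Callable[[], bool],
    rto_seconds: float,
    poll_interval: float = 5.0,
) -> tuple[bool, float]:
    """Inject a failure, then poll a health check until it passes or the RTO elapses.

    Returns (met_rto, elapsed_seconds).
    """
    start = time.monotonic()
    inject_failure()
    while True:
        healthy = is_healthy()
        elapsed = time.monotonic() - start
        if healthy:
            return elapsed <= rto_seconds, elapsed
        if elapsed >= rto_seconds:
            return False, elapsed  # still unhealthy once the RTO has passed
        time.sleep(poll_interval)


if __name__ == "__main__":
    # Stand-ins for a real experiment: the "service" recovers on the third check.
    checks = iter([False, False, True])
    met, elapsed = measure_recovery(
        inject_failure=lambda: print("failure injected"),
        is_healthy=lambda: next(checks),
        rto_seconds=60,
        poll_interval=0.1,
    )
    print(f"met RTO: {met}, recovered after {elapsed:.1f}s")
```

Recording the elapsed time on every run, not just pass/fail, makes it easier to notice when recovery is slowly drifting toward the RTO.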

Are you really confident that you've done everything you can to ensure your on-call pager never rings, or are you worried that the next big incident could be right around the corner? Hopefully the information in this series has helped you answer these questions and make things better for both your users and your operations team. How do you and your teams handle this? Let me know in the comments!